OpenShift ingress setup [UPDATE]

Many OpenShift users want to reach applications inside an OpenShift cluster on externally available ports other than 80 or 443. This post describes how to set up a so-called ingress port, some pitfalls you may hit, and how to avoid them.

First of all, the official OpenShift Container Platform documentation on this topic is the chapter Using a NodePort to Get Traffic into the Cluster.

Prerequisites

| What | Description | Example value |
|---|---|---|
| External IP / range | To be able to connect to the external IP, you MUST use a different IP range than the ones used for Services and Nodes in the OpenShift SDN. | 10.20.10.11/32 or 10.20.20.0/25 |
| Open firewall port | If there is an external firewall in front of OCP, you MUST open the port so that clients can connect to it. | Dynamically assigned by OpenShift; see servicesNodePortRange |
| Cluster admin rights | The ipfailover service account needs host network privileges (the hostnetwork SCC), and granting an SCC requires cluster-admin rights. | |
| Dedicated nodes | You need to decide whether the ingress (ipfailover) pods run on dedicated nodes or on the OCP router nodes. | |
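You can check whether a candidate ingress range clashes with networks already in use with Python's ipaddress module. The sketch below assumes the OpenShift 3.x defaults: 172.30.0.0/16 for the service network and 10.128.0.0/14 for the cluster network. The node network 192.168.10.0/24 and the helper name overlapping_networks are purely hypothetical. Replace all three networks with the values from your own master config and hosts.

```python
import ipaddress

# Assumed values: 172.30.0.0/16 and 10.128.0.0/14 are the OpenShift 3.x default
# service and cluster (pod) networks; the node network is a hypothetical example.
SDN_AND_NODE_NETWORKS = {
    "service network": "172.30.0.0/16",
    "cluster network": "10.128.0.0/14",
    "node network (hypothetical)": "192.168.10.0/24",
}


def overlapping_networks(ingress_cidr, existing=SDN_AND_NODE_NETWORKS):
    """Return the names of all existing networks that overlap ingress_cidr."""
    ingress = ipaddress.ip_network(ingress_cidr)
    return [
        name
        for name, cidr in existing.items()
        if ingress.overlaps(ipaddress.ip_network(cidr))
    ]


if __name__ == "__main__":
    for candidate in ("10.20.10.11/32", "10.20.20.0/25", "10.128.5.0/24"):
        clashes = overlapping_networks(candidate)
        print(candidate, "OK" if not clashes else "overlaps " + ", ".join(clashes))
```

With these assumed networks, both example values from the table report OK, while 10.128.5.0/24 is flagged because it lies inside the cluster network.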

Introduction

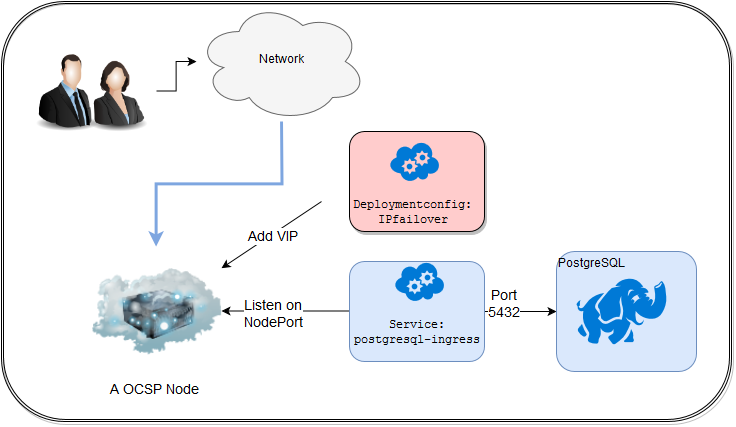

The setup looks like this.

The ipfailover setup is the same as the one described in the High Availability chapter of the cluster documentation, so the concept is well tested and works quite well.

The service postgresql-ingress, which is created from the postgresql Deployment Config (DC), must be of type LoadBalancer. I use the configuration from the documentation chapter Getting Traffic into the Cluster.

Setup

Master config

Add the chosen external network range to master-config.yaml:

```yaml
networkConfig:
  ingressIPNetworkCIDR: 10.20.20.0/25
```
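Before you write the range into the config, it can help to count its addresses. You can also test whether a given address falls inside it, for example the VIP 10.20.20.100 used in the ipfailover step. As I understand it, this is the range from which OpenShift hands out ingress IPs to LoadBalancer services. This is only a sketch, and the helper name in_ingress_range is hypothetical.

```python
import ipaddress

# Range taken from the ingressIPNetworkCIDR example above.
INGRESS_CIDR = ipaddress.ip_network("10.20.20.0/25")


def in_ingress_range(address, cidr=INGRESS_CIDR):
    """Return True if address lies inside the configured ingress range."""
    return ipaddress.ip_address(address) in cidr


print(INGRESS_CIDR.num_addresses)        # 128 addresses in a /25
print(in_ingress_range("10.20.20.100"))  # True
print(in_ingress_range("10.20.20.200"))  # False: a /25 starting at .0 ends at .127
```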

You should also add it to the Ansible inventory file:

```ini
openshift_master_ingress_ip_network_cidr=10.20.20.0/25
```

You can also add the following line to the Ansible inventory file. It then opens the port range on the nodes:

```ini
openshift_node_port_range=30000-32000
```
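The nodePort that OpenShift assigns later comes from the node port range, so the firewall rule from the prerequisites has to cover the whole range. The sketch below parses a range string like the one above and checks whether a given port falls inside it. It assumes this inventory value ends up as servicesNodePortRange, and the helper name parse_port_range is hypothetical. When nothing is configured, the Kubernetes default range is 30000-32767.

```python
def parse_port_range(value):
    """Parse a node port range string such as '30000-32000' into a range object."""
    low, high = (int(part) for part in value.split("-", 1))
    if not 0 < low <= high <= 65535:
        raise ValueError(f"invalid port range: {value!r}")
    return range(low, high + 1)  # inclusive upper bound


node_ports = parse_port_range("30000-32000")
print(len(node_ports))      # 2001 ports
print(31500 in node_ports)  # True
print(32500 in node_ports)  # False: inside the Kubernetes default, but not in this range
```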

Whichever way you choose, you must restart the master(s) to activate the settings.

New project

First, log in to OpenShift Container Platform. Then create the project you want to use for the ingress port, and create the postgresql app in it.

```shell
oc login ....
oc new-project my-ingress
oc new-app postgresql-ephemeral
```

Service account

keepalived, the software behind ipfailover, must bind to the network of the host/node. The service account therefore needs hostnetwork privileges.

```shell
$ oc create serviceaccount ipfailover
$ oc adm policy add-scc-to-user hostnetwork \
    system:serviceaccount:my-ingress:ipfailover
```
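The last argument of the policy command is the full user name of the service account. OpenShift builds it as system:serviceaccount:<project>:<name>. The tiny helper below (the name service_account_user is hypothetical) only spells out that format. If you run the command inside the project itself, oc adm policy add-scc-to-user also accepts the shorter form `-z ipfailover`.

```python
def service_account_user(project, name):
    """Build the user name OpenShift uses for a service account in policy commands."""
    return f"system:serviceaccount:{project}:{name}"


print(service_account_user("my-ingress", "ipfailover"))
# system:serviceaccount:my-ingress:ipfailover
```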

Additional Service

To access PostgreSQL from outside the cluster, we create a new service in addition to the one the new-app generator created.

```shell
$ oc expose dc postgresql --name postgresql-ingress --type=LoadBalancer
```

Get the nodePort

At this point, OpenShift assigns a random port from the node port range (servicesNodePortRange). Every node listens on that port for this service.

You can execute the command below to get the port which was selected for the postgresql-ingress service.

```shell
oc get svc postgresql-ingress -o jsonpath='{.spec.ports[*].nodePort}'
```
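You may prefer to process the service definition in a script instead of using jsonpath. In that case, you can read the nodePort from the JSON output of `oc get svc postgresql-ingress -o json`. The sample document below is a trimmed, hypothetical example (the nodePort 31234 is made up). Only spec.ports[].nodePort matters here, and the helper name node_ports is hypothetical.

```python
import json

# Trimmed, hypothetical example of `oc get svc postgresql-ingress -o json` output.
SAMPLE = """
{"kind": "Service",
 "metadata": {"name": "postgresql-ingress"},
 "spec": {"type": "LoadBalancer",
          "ports": [{"port": 5432, "protocol": "TCP",
                     "targetPort": 5432, "nodePort": 31234}]}}
"""


def node_ports(service_json):
    """Return the nodePort of every port entry in a Service JSON document."""
    service = json.loads(service_json)
    return [p["nodePort"] for p in service["spec"]["ports"] if "nodePort" in p]


print(node_ports(SAMPLE))  # [31234]
```

In practice, pass in the text printed by `oc get svc postgresql-ingress -o json` instead of SAMPLE.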

IPFailover

For high availability of the ingress port, I use the ipfailover feature that OpenShift provides out of the box.

```shell
oc adm ipfailover ipf-ha-postgresql \
    --replicas=1 --selector="region=infra-ingress" \
    --virtual-ips=10.20.20.100 --interface=eth1 --watch-port=<THE_NODEPORT_INGRESS_SVC> \
    --service-account=ipfailover --create
```

Now cross your fingers and check whether the IP is up ;-)
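To check from a client machine whether the VIP actually answers, a plain TCP connect to the VIP and the nodePort is enough. As far as I understand it, the --watch-port option makes ipfailover run a similar "is this port open" check on each node to decide where the VIP may live. That is an assumption about its internals, not a description of them. The function name port_is_open is hypothetical.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

For example, `port_is_open("10.20.20.100", 31234)`, with your real nodePort in place of 31234, should return True once the VIP is up and the service is reachable.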

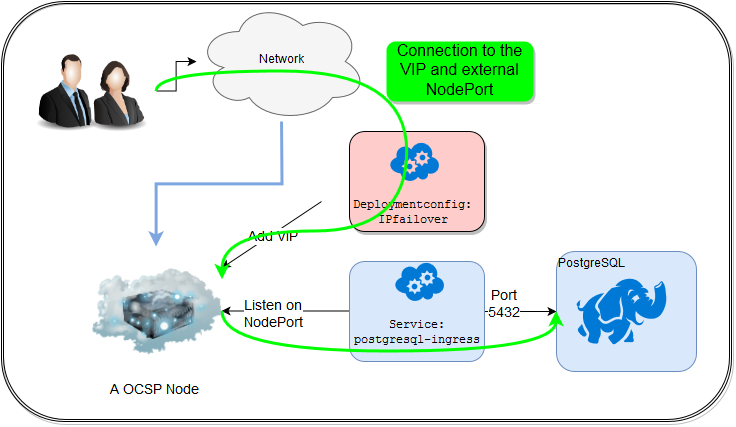

The flow looks like this.

Pitfalls

Node Selector

To run the pods of one project on different nodes, set the project's openshift.io/node-selector annotation to an empty value. For example, you might want the ipfailover pods on the region=infra-ingress nodes and the postgresql pod elsewhere.

```shell
$ oc patch ns my-ingress \
    -p '{"metadata": {"annotations": {"openshift.io/node-selector": ""}}}'
```
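If you generate the patch from a script, building the payload with json.dumps avoids quoting mistakes in the -p argument. The empty string is the important part. As I understand it, an empty openshift.io/node-selector annotation keeps the cluster-wide default node selector from applying to the project. The helper name node_selector_patch is hypothetical.

```python
import json


def node_selector_patch(selector=""):
    """Build the patch that sets the project's openshift.io/node-selector annotation."""
    return json.dumps({"metadata": {"annotations": {"openshift.io/node-selector": selector}}})


print(node_selector_patch())
# {"metadata": {"annotations": {"openshift.io/node-selector": ""}}}
```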

Where the ingress pods (external IP) run is up to you.

Connection errors

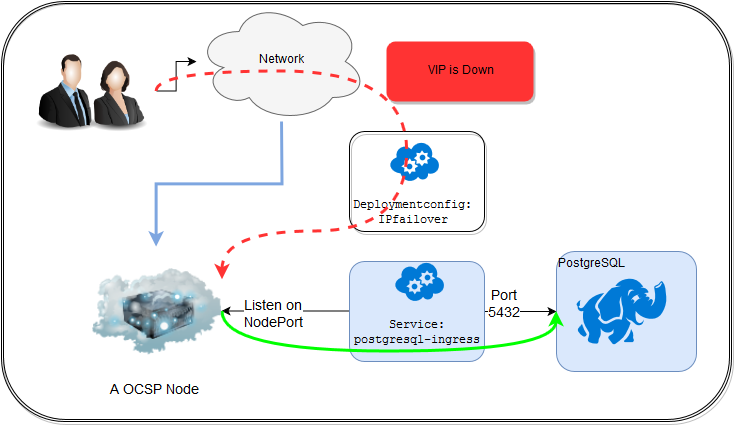

Once the connection has been established, you can scale down the ipfailover pods and the DB connection stays alive. Why?

The key is the postgresql-ingress service. As long as this service is up, the connection remains valid.

NodePorts lower than 30000

If you want to use a different node port range, you need to change servicesNodePortRange (under kubernetesMasterConfig) in master-config.yaml on all masters, and then restart them.
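Before you lower the range, check that it does not include ports that are already bound on your hosts. The port list below is a hypothetical example based on typical OpenShift 3.x services (SSH, router, etcd, master API, kubelet). Verify it against `ss -ltn` on your own masters and nodes. The helper name collisions is also hypothetical.

```python
# Hypothetical list of ports already bound on masters/nodes; verify on your hosts.
HOST_PORTS = {
    22: "sshd",
    80: "router (HTTP)",
    443: "router (HTTPS)",
    2379: "etcd client",
    2380: "etcd peer",
    8443: "master API",
    10250: "kubelet",
}


def collisions(low, high, host_ports=HOST_PORTS):
    """Return the host ports that fall inside a proposed servicesNodePortRange."""
    return {port: name for port, name in sorted(host_ports.items()) if low <= port <= high}


print(collisions(30000, 32767))  # {}
print(collisions(8000, 12000))   # {8443: 'master API', 10250: 'kubelet'}
```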